- At its recent Google Cloud Next ’22 get-together, the hyperscaler pledged itself to radical openness and to the development of the “most open data cloud ecosystem”

- To that end, it made a flurry of announcements about the steps it’s taking to unify data across multiple sources and platforms

- Could this be an important step towards an ‘unlocked’ cloud?

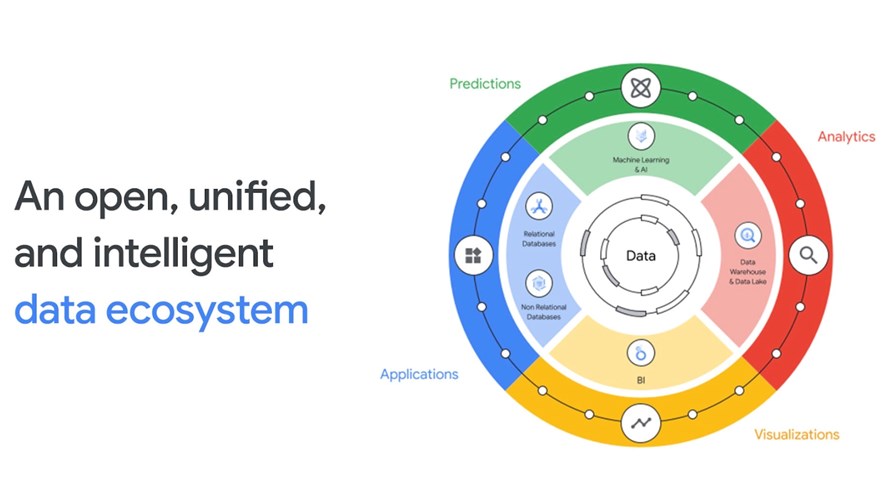

Google has long pledged undying fealty to the God of Openness across a whole range of technologies and issues, including open source, open standards and, this year especially, open data. During its recent Google Cloud Next ’22 event, it stated that its goal was to unify all data, from all sources, across any platform.

According to Gerrit Kazmaier, VP and GM of database, data analytics and Looker at Google, writing in the company’s data analytics blog: “Data complexity is at an all-time high… and only an open data cloud ecosystem can unlock the full potential of data and remove the barriers to digital transformation.”

There’s no doubt that Google has set itself a huge and protracted task with this one and an avalanche of announcements was made at the event to help it all along – though the question “How long will it take?” is as open as Google would like the cloud to be.

So what’s it doing?

Already this year it has launched the Data Cloud Alliance, which it says is today supported by 17 companies, with the objective of promoting open standards and interoperability between popular data applications. In addition, Google claims that more than 800 software companies are currently beavering away on applications using Google’s Data Cloud and it has more than 40 data platform partners offering integrations to other Google Cloud customers through Google Cloud Ready – BigQuery.

Google offers validation for these integrations. When it comes to analytics, it points out that up to 90% of stored data is unstructured but still highly valuable. Given its leading position in cloud analytics, Google (and everyone else) can clearly see the advantages it might win by promoting data file compatibility so that, for instance, both the structured and unstructured data compiled by non-Google applications, and submerged in diverse file formats under various Google and non-Google data lakes, can be queried and acted upon by analytics tools.

But, of course, that increased data file openness might see this process sometimes flowing in the opposite direction, with Google customers’ data being accessed and analysed by competing analytics applications.

The Google philosophy is that this is ‘all good’ because it’s what customers want and if customers can be given what they want, it will all work out in Google Cloud’s favour in the longer term.

So what are some of the recently announced unification initiatives? (There are many: see the Google Cloud Data Analytics blog.) As a taster, Google Cloud has announced new or expanded integrations with MongoDB, Collibra, Elastic and others. Major data formats, such as Apache Iceberg and the Linux Foundation’s Delta Lake, are getting extra support within Google Cloud. It is also enhancing its ability to analyse unstructured and streaming data in BigQuery, which is pretty much the analytics centrepiece for Google Cloud and enables companies to analyse massive amounts of data.

Open all hours

Google’s completely open cloud destination is a big promise and is unlikely to be fully met any time soon. But establishing the direction of travel is the important thing and Google is clearly convinced by its own history that its enthusiasm for openness will pay off in the long run.

But for telcos, there’s another important aspect: Google’s open data push may make the hyperscaler a more valuable partner for the telco cloud and for service delivery to telco customers but, despite a softening in attitudes (perhaps in step with all the telco/hyperscaler alliances formed over the past year or so), many individuals in the industry are still wary when it comes to working with hyperscalers.

Despite the industry’s obsession with standardisation, telcos have historically struggled to standardise rigorously enough to easily mix their legacy environments or to extricate themselves from one vendor in favour of another across, perhaps, thousands of telco sites.

Since equipment and system lock-in has long been a perceived problem with the incumbent telecoms equipment vendors, it’s hardly surprising there is a concern that lock-in tendencies may be even worse if telcos simply swap their current set of dominant providers in favour of replacements bearing ever more powerful and difficult-to-master technology.

It’s a complex, expensive and time-consuming business to rip and replace legacy kit: it takes one fortune to execute the ripping and another fortune to integrate and test the replacements. The rip-and-replace difficulty became so pressing in the UK that BT was granted nearly an extra year to swap out its Huawei kit, which had been banished in the wake of government concern around national security. The original January 2023 deadline to complete the exercise has now been extended to December 2023.

Lock-in fear is on the slide

But attitudes are softening. Officially, at least, the old lock-in fear appears to have been put on the backburner as telcos scramble to arrange deep partnerships with the hyperscalers that, after all, know a thing or two about virtualisation and cloud technologies and are well placed to work with telcos on their journey to cloud-native transformation. Google’s efforts with its open cloud target are one more encouragement for telco/hyperscaler partnerships and there are also good reasons why lock-in with the telecom cloud environment is no longer the massive fear it once was (see Polygamy at the cutting edge: How open relationships fuel the telco cloud).

Google’s increasingly open telco cloud has also been helped along by initiatives such as Kubernetes, which enables containerised applications to be ported to different cloud infrastructures, and the multicloud-enabling Anthos for Telecom. Factor in too a general trend towards openness among the other hyperscalers, along with users’ increasing use of a multicloud approach, and it starts to look as though cloud “lock-in” is increasingly just a hung-over perception rather than the result of a long-term strategy promulgated by cloud infrastructure and service providers.

So where might it all end? Ideally, with a full ‘cloud-of-clouds’ ecosystem with very few limits as to what data can be used on which cloud application and within which cloud infrastructure, with artificially intelligent analytics ensuring the whole interlocking system behaves itself. Well, we live in hope.
