Source: Nvidia

- Nvidia has unveiled its mobile network infrastructure plan

- It believes its capital-intensive proposition will appeal to mobile operator CFOs

- Senior VP of telecom unveils compute module, dubbed ARC-1, with flexible deployment potential for RAN and AI workload support

- Should Nvidia’s proposed AI-RAN architecture gain traction with network operators, it could herald a much larger upheaval in the radio access network (RAN) technology sector

- But questions regarding network operator capital investment potential are still being sidestepped and power consumption is bound to be a concern

Nvidia positioned itself firmly as a radio access network (RAN) technology player this week with the launch of its AI Aerial hardware/software combo solution and its AI-RAN R&D partnership with T-Mobile US, Ericsson and Nokia, moves that (quite rightly) attracted a lot of attention and headlines. And the AI chip giant’s aspirations became even clearer following a media and analyst briefing on 19 September that will give a lot of big names across the network infrastructure ecosystem food for thought.

That’s because of the way Nvidia is testing the RAN waters with an open compute system that, ultimately, could bite the hands of the traditional network infrastructure giants with which it is exploring the potential of AI-RAN architectures.

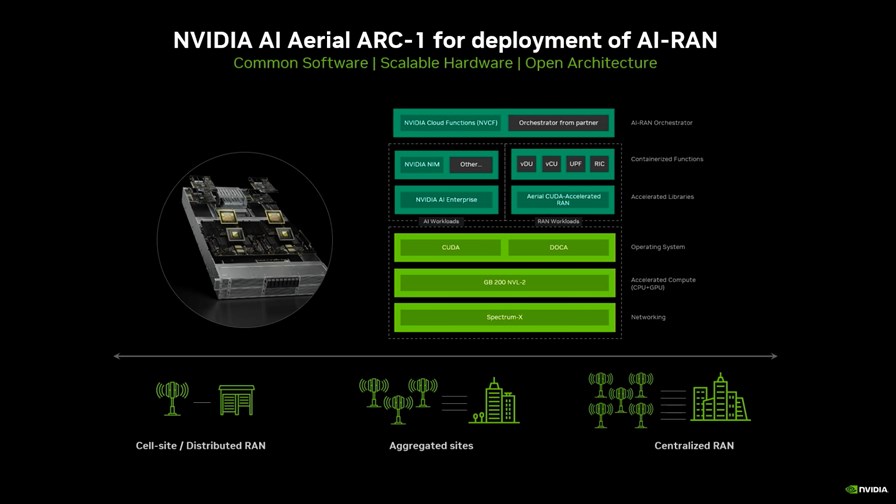

During the briefing, Nvidia’s senior VP of telecom, Ronnie Vasishta, referenced the role of the company’s ARC-1 platform (see image, above) in its pitch to the mobile operator community. This product has not previously been mentioned or unveiled in any of the company’s announcements or presentations, a point made in a question put to Vasishta by Heavy Reading’s senior principal analyst for mobile networks, Gabriel Brown.

The ARC-1 curveball

According to Vasishta, ARC-1 is essentially the AI Aerial computer. It combines Nvidia's GB200 NVL2 with the vendor's Spectrum-X Ethernet networking platform for connectivity. The GB200 NVL2 is a 'superchip' made up of two Grace central processing units (CPUs) and two Blackwell graphics processing units (GPUs), which together deliver 1.3 Tbytes of shared memory. ARC-1 therefore acts as the baseband processing platform for a RAN, without the need for any additional acceleration modules. That pitches Nvidia as a competitive supplier of physical layer (Layer 1) hardware to mobile network operators, a role that Nvidia doesn't play (yet!).

That Layer 1 hardware runs Nvidia's CUDA software libraries as the operating system for AI workloads, and the company's DOCA software as the RAN operating system. The mobile networking functionality itself, according to Nvidia, can be provided by any vendor that has developed standards-compatible software.

According to Vasishta, the ARC-1 has the muscle to support fronthaul connectivity as well as AI workloads. It comes in a form factor that can be deployed at cell sites, aggregation points or centralised points of presence (PoPs), so it can support cloud RAN, virtual RAN and Open RAN deployments in any way an operator sees fit.

All of this supports the general proposition from Nvidia, as outlined in its AI Aerial strategy. In Nvidia's view, the optimum way forward for network operators is to deploy high-performance computing systems in their networks, running both their RAN operations and AI workloads on a common platform that has a very long shelf life.

In theory, this is all very attractive to network operators that have long talked about wanting to avoid deploying multiple networks and platforms to support different services and business opportunities. What could be more enticing than a compute platform that can seemingly do it all?

Show me the money

The answer, at least in this editor’s eyes, is a compute platform that can seemingly do it all without breaking the bank or throwing a network operator’s energy-efficiency strategy out of the window.

And this is where more clarity is needed. Nvidia claims AI Aerial is energy efficient, but that's where the conversation currently ends. The company will have to deliver a great deal more data and many more proof points before telcos' green concerns can be overcome.

And then there’s the cost. Mobile operators have spent the past six years collectively pumping tens of billions of dollars into their 5G radio access networks – so far with disappointing return on investment (ROI) results. Those operators need to see a return and will want to put that deployed infrastructure to work for many years to come.

But what Nvidia is proposing is not only a new network architecture that would consign current 5G networks to the networking graveyard. It is also one that would come at an eye-watering cost because, as everyone knows, everyone and their dog wants to get their hands on Nvidia hardware right now and is prepared to pay top dollar for it. Deploying ARC-1 computers in any meaningful way is going to eat up telco capex budgets (and more), at least in the view of this editor. The AI-RAN concept is very appealing; the economics (and potentially the sustainability impact), not so much.

So, TelecomTV asked Vasishta (twice), might Nvidia, one of the most valuable and profitable companies in the world with a current market capitalisation of $2.9tn, consider some kind of financing package to support telco investment plans and help spread the capex load?

Vasishta didn’t answer the question, instead focusing on the suggestion that telco CFOs might weep at the current economics associated with an AI-RAN deployment. According to the Nvidia executive, the AI Aerial solution will make telco CFOs “very happy as it changes the TCO [total cost of ownership] and return on capital deployed dynamics”.

That’s because, in Nvidia’s view, telcos will want to branch out their businesses into all kinds of AI services and, to do so, they will need to invest in the supporting infrastructure in order to offer GPU-as-a-service (GPUaaS) and hosted AI inferencing services. And if they invest in AI Aerial, they will be able to offer these new revenue-generating services using the same infrastructure that runs their radio access networks while already having a platform that will be ready to support 6G services.

That is Nvidia’s line and it’s sticking to it!

Now, to be fair, Nvidia does have support for this approach. That support came initially from Japan's SoftBank, which has been trialling AI-RAN for more than a year already, and now, of course, from T-Mobile US. There will doubtless be others that want to explore the potential of this approach.

And it’s absolutely true that telcos want in on the AI services action and some of them are already lining up GPUaaS and building what Nvidia refers to as AI factories. What’s notable, though, is that these current deployments by the likes of SK Telecom and Singtel are not part of their RAN strategies – these are datacentre-based deployments specific to AI service offerings, though of course in time they might also support other workloads and applications. It’s early days for all of this, but these current investments are far from the large-scale, combined mobile/AI service-supporting platforms that Nvidia is pitching.

There’s no doubt, though, that network operators will be interested in Nvidia’s RAN strategy and want to know more.

Baseband botheration

The other part of the telecom ecosystem that will want to know more is the vendor community, because Nvidia is now encroaching on its turf. The ARC-1 proposition is likely to be causing concern already at the headquarters of existing baseband chip vendors. It will also have the traditional RAN vendors wondering how this might play out.

Nvidia’s ARC-1 clearly looks to be a competitive threat to the likes of Broadcom, Marvell, AMD, Intel, Qualcomm (which has had aspirations in the Open RAN sector) and more. It also steps on the toes of Ericsson and Nokia, both of which have their own chip development units. (This will explain why VMware, now owned by Broadcom, isn’t a cloud platform partner for Nvidia’s AI Aerial solution, while Red Hat, Wind River and Canonical are on board.)

It’s also worth considering that, ultimately, telcos might not be too comfortable with the ARC-1 setup as it is all Nvidia technology and, in theory, network operators are trying to avoid vendor lock-in wherever they can rather than lurch from one lock-in scenario to another.

And might AI Aerial/ARC-1 give Ericsson, Nokia, Huawei, ZTE and Samsung Networks, the main traditional RAN technology suppliers to the world’s mobile operators, cause for concern?

Heavy Reading’s Brown thinks Nvidia is likely to play nice with this subset of the ecosystem. Responding to questions from TelecomTV, Brown noted that the chip giant has been “careful to carve out a role for the RAN software vendors”, with Fujitsu, Ericsson and Nokia announced so far. Nvidia “really needs these players on board and managing these relationships will be super critical. There’s also a spot for third-party orchestrators like Wind River and Red Hat… although probably in a somewhat diminished role since it looks like they no longer provide the runtime OS [operating system],” a role taken in AI Aerial by Nvidia’s own software.

Relationship management with these companies will be very important for Nvidia, according to Brown, particularly the major vendors that also have their own in-house silicon teams.

Brown added: “Overall, this is an interesting and positive move. Nvidia in the RAN is innovative and exciting. But it is early days and the company is selling an ambitious pitch – for example, the idea that distributed base stations will serve double duty as ‘GPU-as-a-service’ platforms and referencing today’s GPU spot prices as the economic justification is far-fetched based on a near- to mid-term view of mobile network technology and market conditions.”

Whatever happens, Brown expects Nvidia to play a role in the mobile networking market from here on. He noted that Nvidia’s CEO, Jensen Huang, “really likes telco networking – more than is commonly acknowledged. He rightly sees how important service provider networks are to the rest of the AI story and this gives good confidence that Nvidia is committed to a long-term investment programme. The RAN processor/server market itself would otherwise be barely a blip on Nvidia’s radar.”

And could that investment programme go even further? That idea has been floated by another industry analyst, James Crawshaw, who heads up Omdia's service provider transformation practice. In a LinkedIn post (which, he notes, reflects his personal view rather than that of his employer), he asks: "If Nvidia's GPUs are good for Layer 1 and a bit of AI inferencing on the side, then what is to stop Nvidia writing software for Layers 2 and 3? For now, Nvidia seems content to run third-party Layer 2/3 software on its Grace CPUs, but with a [near] $3tn market cap, what is stopping them going the whole hog?"

There’s one to debate over a pint of wine…

- Ray Le Maistre, Editorial Director, TelecomTV
