SoftBank and Nvidia tie revenue model to new AI-RAN solution

By Guy Daniels

Nov 13, 2024

- SoftBank unveils AITRAS, its first AI-RAN solution developed with Nvidia

- A trial held in Japan demonstrated concurrent AI inferencing alongside RAN workloads

- There’s a lot at stake: $5 of AI inference revenue per $1 of new AI-RAN capex

- SoftBank adopts Nvidia AI Enterprise to create AI marketplace

The prospect of a viable AI-RAN has been given a significant boost with the news of a trial between Japanese telco SoftBank and Nvidia. Using Nvidia’s recently launched AI Aerial accelerated computing platform, SoftBank says it has successfully piloted the world’s first combined AI and 5G network. SoftBank is also now using Nvidia AI Enterprise software to create an AI marketplace that can meet the demand for local, secure AI computing. The new service will support AI training as well as edge AI inference.

The duo have been working towards this point for years already and are both founding members of the AI-RAN Alliance, which was launched in February – see AI-RAN Alliance launches at #MWC24 and Nvidia and SoftBank team on GenAI, 5G/6G platform.

During his keynote speech at the Nvidia AI Summit Japan, company founder and CEO Jensen Huang noted that Japan has a history of pioneering technological innovations: “With SoftBank’s significant investment in Nvidia’s full-stack AI, Omniverse and 5G AI-RAN platforms, Japan is leaping into the AI industrial revolution to become a global leader, driving a new era of growth across the telecommunications, transportation, robotics and healthcare industries in ways that will greatly benefit humankind in the age of AI.”

The outdoor trial was conducted in the Kanagawa prefecture and enabled SoftBank to demonstrate that its Nvidia-accelerated AI-RAN solution can achieve carrier-grade 5G performance, while using the network’s excess capacity to run AI inference workloads.

The premise is that traditional radio access networks (RANs) typically run at only about one-third of their capacity, because they must be over-provisioned to handle peak traffic loads as and when they occur. “With the common computing capability provided by AI-RAN, it is expected that telcos now have the opportunity to monetise the remaining two-thirds capacity for AI inference services,” explained Nvidia in a written statement. The two partners estimate that telco operators can earn roughly $5 in AI inference revenue for every $1 of capital expenditure (capex) they invest in new AI-RAN infrastructure.

That's worth repeating: $5 of AI inference revenue per $1 of new AI-RAN capex. TelecomTV reached out to Nvidia for clarification about payback terms and was told that this figure is estimated over a five-year period.
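To make the headline figures concrete, here is a minimal back-of-envelope sketch in Python. It uses only the numbers quoted above (one-third average utilisation, $5 of revenue per $1 of capex, five-year window); the function names are illustrative, and the even year-by-year spread of revenue is our own simplifying assumption, not something Nvidia or SoftBank stated.

```python
from fractions import Fraction

def idle_fraction(utilisation: Fraction) -> Fraction:
    """Share of RAN compute left idle at a given average utilisation."""
    return 1 - utilisation

def annual_revenue_per_capex_dollar(total_ratio: Fraction, years: int) -> Fraction:
    """Average yearly inference revenue per $1 of capex.

    Assumption (ours): revenue is spread evenly across the payback window.
    """
    return total_ratio / years

utilisation = Fraction(1, 3)   # "about one-third capacity"
revenue_ratio = Fraction(5)    # "$5 ... per $1 of new AI-RAN capex"
payback_years = 5              # five-year period, per Nvidia

print(idle_fraction(utilisation))                                      # 2/3
print(annual_revenue_per_capex_dollar(revenue_ratio, payback_years))   # 1
```

Using exact fractions keeps the "two-thirds" figure exact rather than a rounded float. Read this way, the claim amounts to each capex dollar returning, on average, about one dollar of inference revenue per year over the five years.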

SoftBank goes further with its calculations and estimates that when taking into account both operating expenditure (opex) and capex costs, it can achieve a return of up to 219% for every AI-RAN server it adds to its infrastructure. It's a compelling equation.

“Shifting from single-purpose to multi-purpose AI-RAN networks can mean five times the revenue for every dollar of capex invested,” said Ronnie Vasishta, senior vice president of telecom at Nvidia. “SoftBank’s live field trial marks a huge step toward AI-RAN commercialisation with the validation of technology feasibility, performance and economics.”

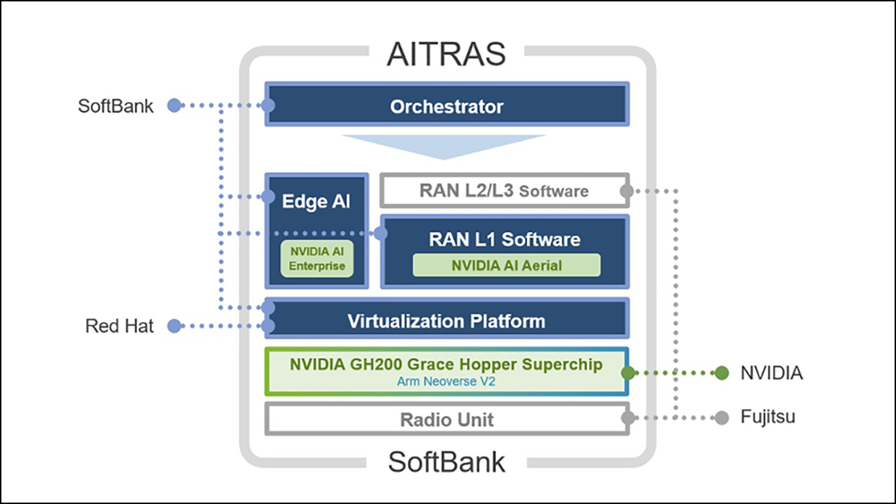

For the trial, SoftBank used Nvidia AI Enterprise to build real-world AI inference applications, including autonomous vehicle remote support, robotics control and multimodal retrieval-augmented generation at the edge. Nvidia says that all of these inference workloads ran optimally on SoftBank’s AI-RAN network. Fujitsu and Red Hat also contributed to the trial of the AI-RAN solution, which SoftBank is calling ‘AITRAS’ – see this Red Hat announcement and this Fujitsu press release.

Inference refers to the process of running pre-trained AI models on previously unseen data to make predictions or decisions. Edge AI inference moves this work away from cloud servers and runs it much closer to the data source, which enables faster processing, lower latency and improved security, among other benefits.

SoftBank intends to build an ecosystem that connects the demand and supply of AI technology by using Nvidia AI Enterprise serverless application programming interfaces (APIs) and its in-house developed orchestrator. This will enable SoftBank to route external AI inferencing jobs to an AI-RAN server when computing resources become available, to deliver localised, low-latency, secure inferencing services.
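SoftBank has not published how its in-house orchestrator works, so the following is only a hypothetical Python sketch of the general idea described above: RAN traffic always takes priority on a shared GPU server, and queued external inference jobs are admitted only into whatever capacity RAN leaves idle. The class and function names (`AiRanServer`, `InferenceJob`, `schedule`, `on_ran_load_change`), the single "fraction of GPU" capacity unit and the FIFO eviction policy are all our own assumptions for illustration.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class InferenceJob:
    name: str
    gpu_share: float  # hypothetical unit: fraction of the server's GPU capacity

@dataclass
class AiRanServer:
    """Toy model of one GPU server shared by RAN and AI inference (hypothetical)."""
    ran_load: float  # fraction of capacity the RAN currently needs, 0.0 to 1.0
    running: list[InferenceJob] = field(default_factory=list)

    def ai_load(self) -> float:
        return sum(job.gpu_share for job in self.running)

    def spare_capacity(self) -> float:
        # Capacity left over after the RAN and already-admitted AI jobs.
        return max(0.0, 1.0 - self.ran_load - self.ai_load())

def schedule(server: AiRanServer, queue: deque) -> list[str]:
    """Admit queued jobs in FIFO order while the job at the head fits in spare capacity."""
    admitted = []
    while queue and queue[0].gpu_share <= server.spare_capacity():
        job = queue.popleft()
        server.running.append(job)
        admitted.append(job.name)
    return admitted

def on_ran_load_change(server: AiRanServer, new_load: float, queue: deque) -> list[str]:
    """Give RAN priority: if its load grows, evict the most recently admitted
    AI jobs back to the front of the queue until total demand fits again."""
    server.ran_load = new_load
    evicted = []
    while server.running and server.ran_load + server.ai_load() > 1.0:
        job = server.running.pop()
        queue.appendleft(job)
        evicted.append(job.name)
    return evicted
```

For example, with the RAN using a quarter of the server and three queued jobs needing 0.5, 0.25 and 0.5 of it, `schedule` admits the first two and leaves the third waiting; if RAN load then rises to 0.5, `on_ran_load_change` pushes the second job back onto the queue. A production orchestrator would also have to respect RAN latency deadlines and GPU memory limits, which this sketch ignores.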

“SoftBank’s AITRAS is the first AI-RAN solution developed through a five-year collaboration with Nvidia,” explained Ryuji Wakikawa, vice president and head of the Research Institute of Advanced Technology at SoftBank. “It integrates and coordinates AI and RAN workloads through the SoftBank-developed orchestrator, enhancing communication efficiency by running dense cells on a single Nvidia-accelerated GPU server.”

SoftBank already has a close connection with Nvidia. The operator’s software-defined 5G radio stack is optimised for Nvidia’s AI computing platform and includes Layer 1 software enhanced by Nvidia’s Aerial CUDA-accelerated RAN libraries. The next step in its network transformation programme is to incorporate Nvidia Aerial RAN Computer-1 systems, which it estimates can use 40% less power than traditional 5G network infrastructure.

“Countries and regions worldwide are accelerating the adoption of AI for social and economic growth, and society is undergoing significant transformation,” said Junichi Miyakawa, president and CEO of SoftBank. “With our extremely powerful AI infrastructure and our new, distributed AI-RAN solution, AITRAS, that reinvents 5G networks for AI, we will accelerate innovation across the country and throughout the world.”

- Guy Daniels, Director of Content, TelecomTV
